I am a first-year Master's student in the Institute for Computational and Mathematical Engineering (ICME) at Stanford University. Previously, I was a Research Fellow at Microsoft Research India, where I worked with Dr. Aditya Kanade, Dr. Nagarajan Natarajan, and Dr. Abhijeet Awasthi on the AI4CODE team.

My research focuses on understanding and advancing the capabilities of Large Language Models (LLMs). Broadly, my interests lie in two areas: (1) Architectural Innovations: developing approximate mathematical models and interpretability tools to better understand how LLMs function, and exploring architectural modifications to improve their efficiency and performance; and (2) AI4CODE: enhancing LLMs for code generation by incorporating structured, human-like reasoning to enable more reliable and explainable problem solving.

I earned my B.Tech. in Mathematics and Computing from IIT Goa, India, in 2023. For more details about my background, see my CV. If you'd like to discuss my work or research interests, feel free to get in touch.

Experience

-- Created a benchmark to evaluate the ability of LLMs to generate functionally correct and optimized code.

-- Fine-tuned LLMs for code editing, achieving improvements of up to 15–20%, and created a pipeline for synthetic data generation using GPT-4 and Llama; presented this work at ICLR'25 in Singapore.

-- Worked on improving the reasoning of multimodal LLMs.

-- Automated the process of optimally allocating drivers to Metro trains by formulating the constraints in the Gurobipy solver.

-- Reformulated the problem as a max-flow problem, reducing timetable preparation time from a few days to a few seconds.

-- Deployed the algorithm at Bengaluru Metro Rail Corporation Limited (BMRCL).

-- Contributed to the backend of the Questa compiler in C, optimizing coverage calculations to achieve a 3x improvement.

-- Designed 50+ test cases and identified and resolved 10+ JIRA issues, significantly improving system reliability.

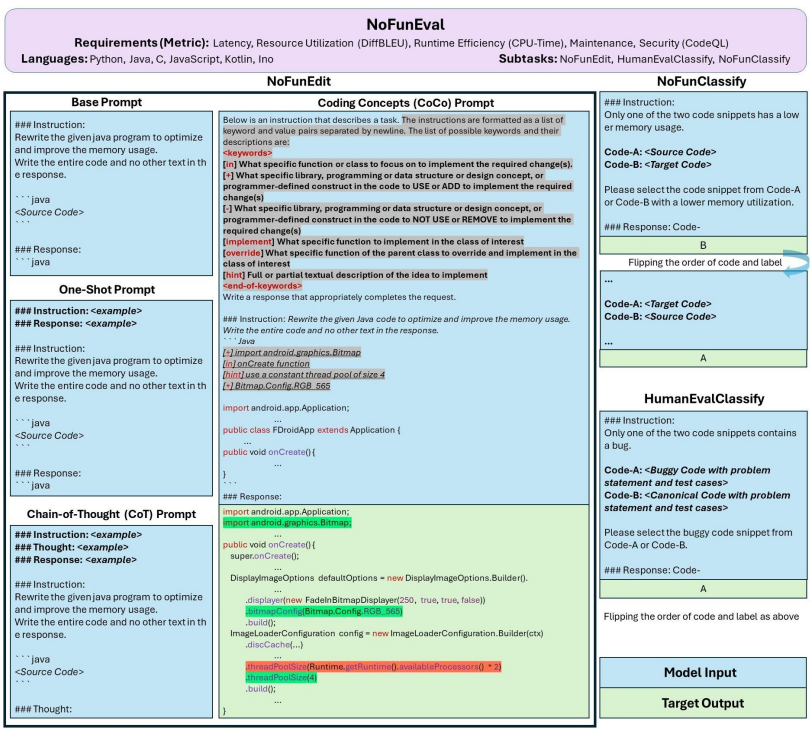

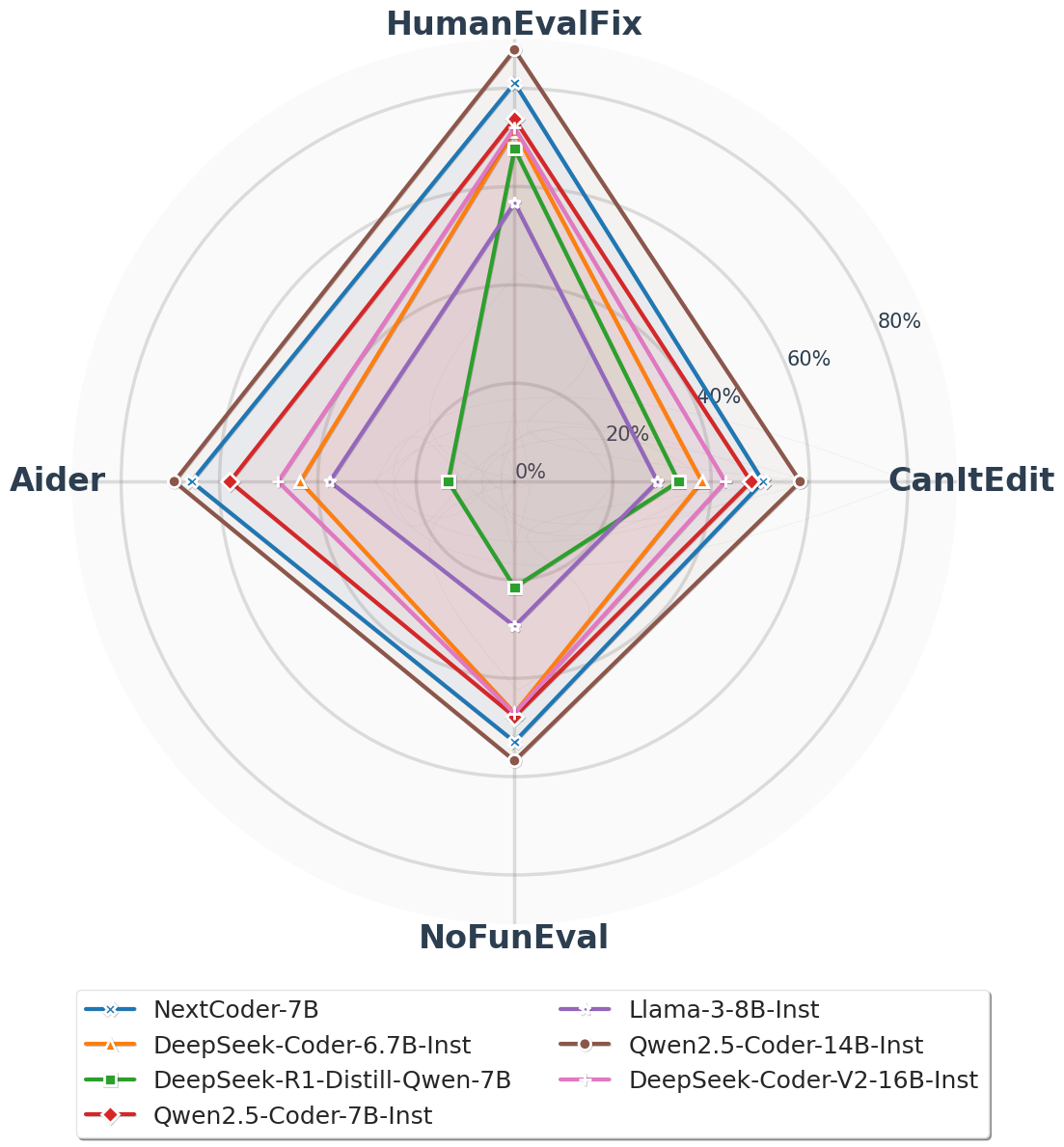

NoFunEval: Funny How Code LMs Falter on Requirements Beyond Functional Correctness

Manav Singhal, , Abhijeet Awasthi, Nagarajan Natarajan, Aditya Kanade

COLM'24 PDF

Robust Learning of Diverse Code Edits

, Swayam Singh, Abhijeet Awasthi, Aditya Kanade, Nagarajan Natarajan

DL4C @ ICLR'25, ICML'25 PDF

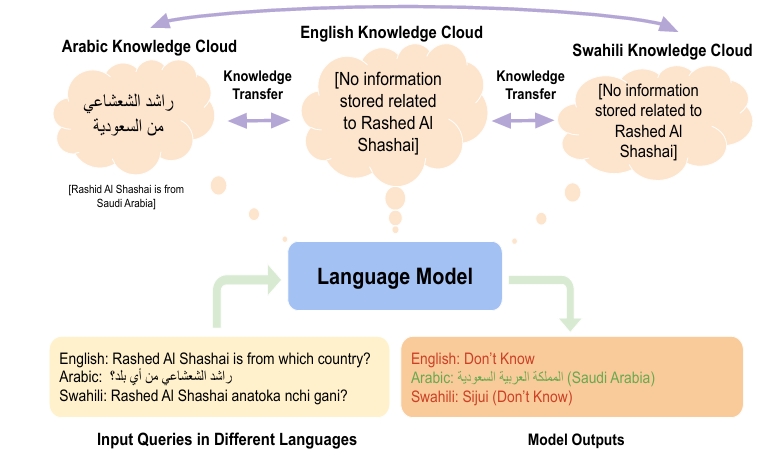

Language Models' Factuality Depends on the Language of Inquiry

, Kumar Tanmay, Ayush Agrawal, Kumar Ayush, Hamid Palangi, Paul Pu Liang

arXiv preprint PDF

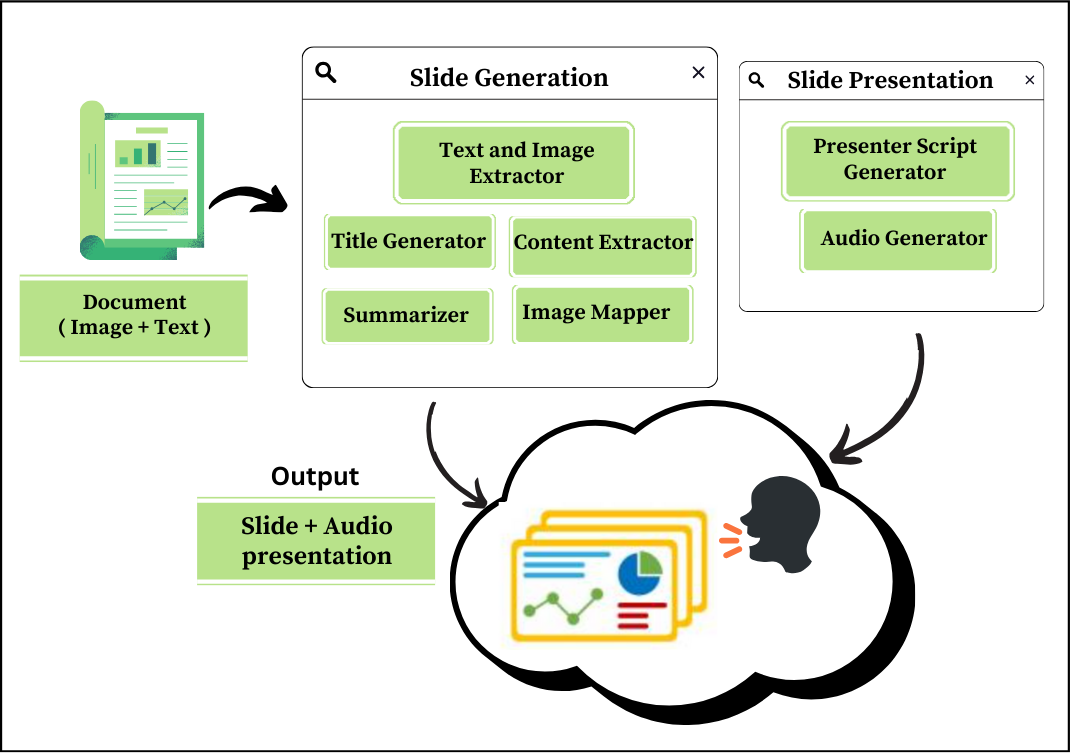

PASS: Presentation Automation for Slide Generation and Speech

, Aarohi Bhand

arXiv preprint PDF